LLM Audit

Secure Your LLM Deployment

Prevent Unwanted Risks

Ready to Safeguard Your LLM Solution?

Partner with us to secure and optimize your LLM solutions and confidently navigate today's dynamic digital landscape.

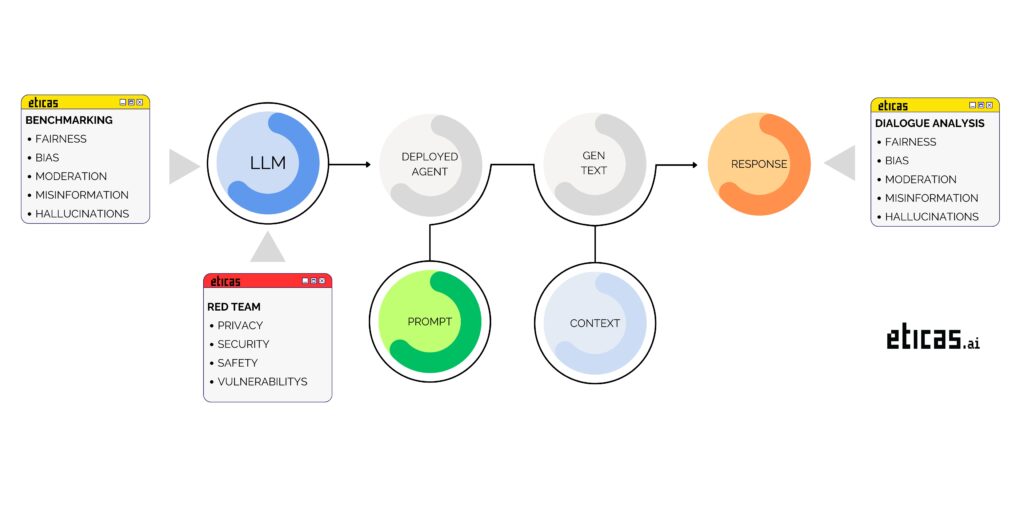

Eticas Solution

At Eticas AI, we specialize in auditing advanced LLM solutions to ensure optimal performance, robust security, and regulatory compliance. Our comprehensive audit process covers a diverse range of implementations, including:

- Chat: Conversational models that generate responses from free-form inputs, suited to both broad and specialized domains.

- RAG (Retrieval-Augmented Generation): Cutting-edge solutions integrating text generation with external information retrieval for context-aware responses.

- Solution-Based: Custom models engineered to address specific challenges such as ranking, recommendation, and classification.

Our audits are aligned with leading industry frameworks, including NIST AI RMF, OWASP API Security Top 10, OWASP LLM Top 10, the EU AI Act, and MITRE ATLAS.

Without Automated Tool

Using Automated Tool

Eticas AI analyzes the following types of vulnerabilities:

ETHICS AND SAFETY

Diversity, Non-Discrimination & Fairness

Scrutinizing biases to ensure every voice is valued and equality prevails.

Harmful Content

Eliminating hate speech and extremist language to keep your model safe and responsible.

RED-TEAMING

Security & Privacy

Shielding user data to ensure sensitive information never leaks.