AI Bias Python Library: Eticas

An open-source Python library designed for developers

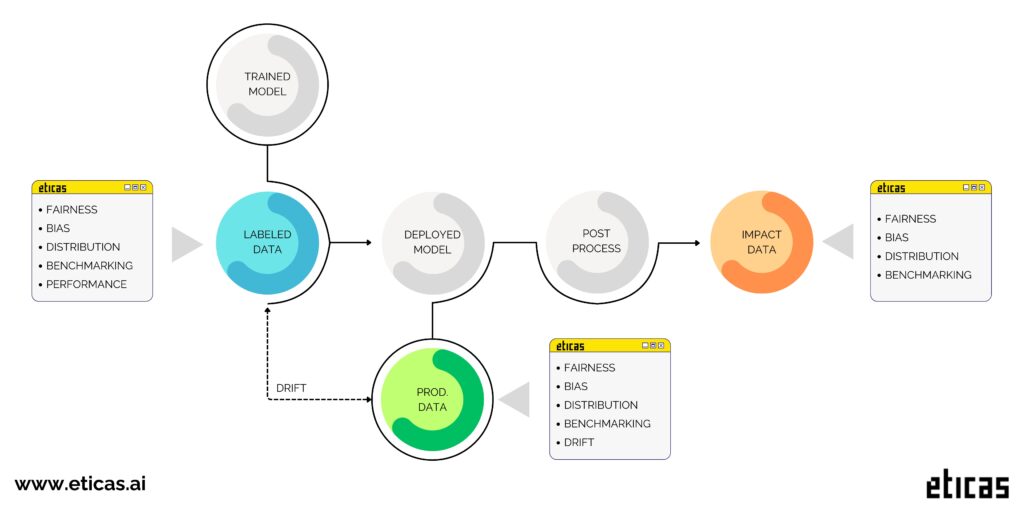

eticas-audit offers a tool suite that promotes transparency, accountability, and ethical AI development. The framework can audit a model at every stage of its lifecycle. At its core, it compares privileged and underprivileged groups to evaluate whether model outcomes are balanced and equitable.

With its wide array of metrics, the tool supports continuous bias monitoring. It provides insight into fairness even when labels are not available for comprehensive reporting.

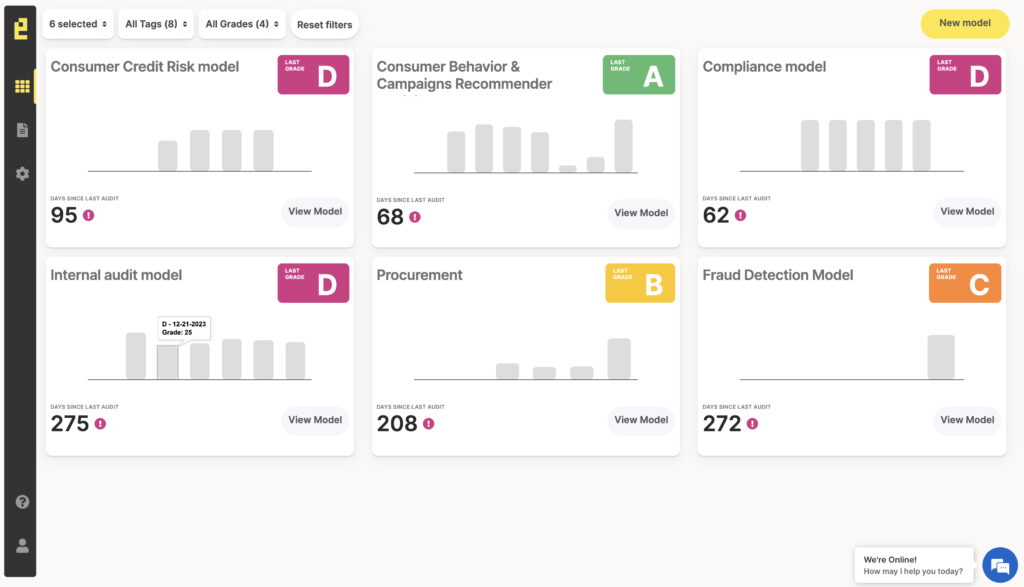

ITACA: Monitoring & Auditing Platform

Unlock the full potential of Eticas by upgrading to our subscription model! With ITACA, our powerful SaaS platform, you can seamlessly monitor every stage of your model’s lifecycle. Easily integrate ITACA into your workflows with our library and API — start optimizing your models today!

Learn more about our platform at 🔗 ITACA — Monitoring & Auditing Platform.

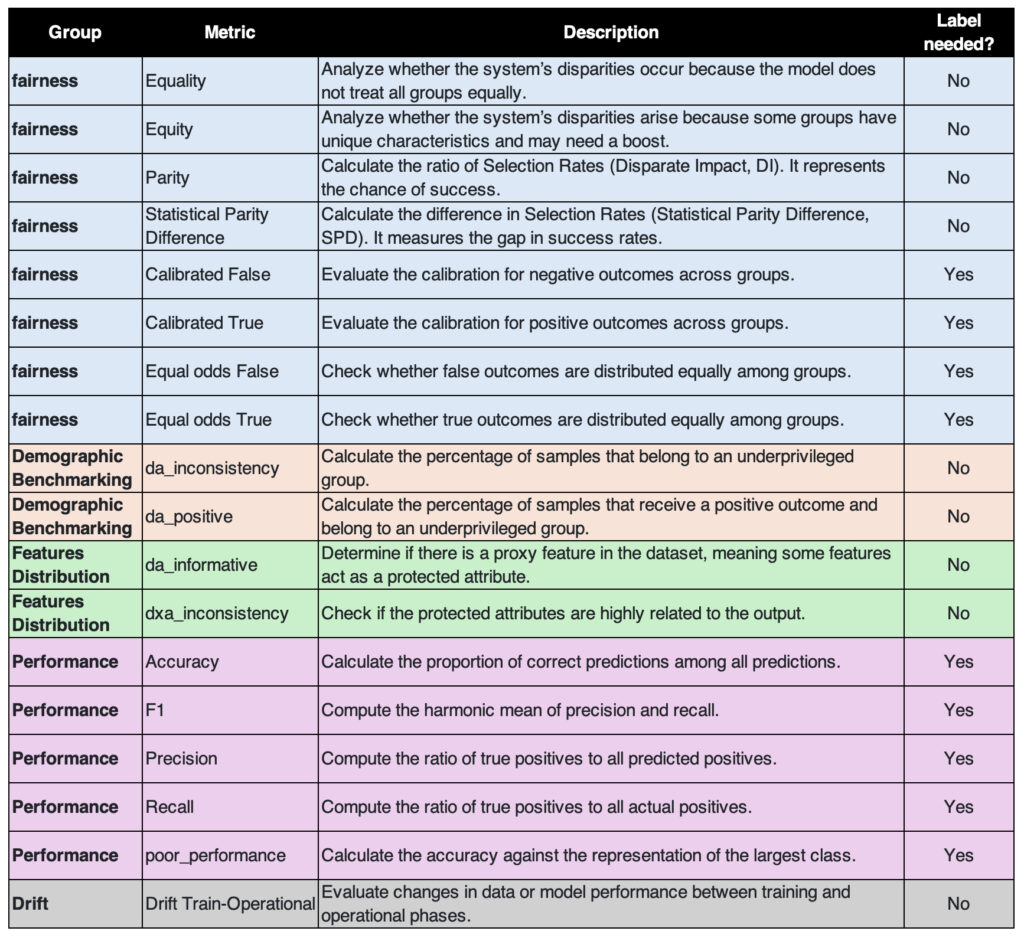

Metrics

- Demographic Benchmarking: Performs in-depth analysis of population distribution to identify and address demographic disparities.

- Model Fairness Monitoring: Ensures equality in decision-making and helps detect potential equity issues.

- Features Distribution Evaluation: Analyzes correlations, causality, and variable importance to understand the impact of different features.

- Performance Analysis: Provides metrics to assess model performance, including accuracy and recall.

- Model Drift Monitoring: Detects and measures changes in data distributions and model behavior over time to ensure ongoing reliability.
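
To make the idea of comparing privileged and underprivileged groups concrete, here is a minimal sketch of one common fairness measure, the ratio of positive-output rates between the two groups (often called disparate impact). It is plain Python and does not use eticas-audit; the helper names `positive_rate` and `disparate_impact` and the sample rows are assumptions made for illustration, not part of the library.

```python
def positive_rate(rows, output_column, positive_output):
    """Share of rows whose output counts as positive."""
    if not rows:
        raise ValueError("cannot compute a rate for an empty group")
    hits = sum(1 for row in rows if row[output_column] in positive_output)
    return hits / len(rows)


def disparate_impact(rows, attribute, underprivileged, output_column, positive_output):
    """Underprivileged positive rate divided by the privileged positive rate.

    Hypothetical helper: every value not listed in `underprivileged`
    is treated as privileged.
    """
    under = [r for r in rows if r[attribute] in underprivileged]
    priv = [r for r in rows if r[attribute] not in underprivileged]
    priv_rate = positive_rate(priv, output_column, positive_output)
    if priv_rate == 0:
        raise ValueError("privileged group has no positive outputs")
    return positive_rate(under, output_column, positive_output) / priv_rate


# Illustrative data: sex == 2 is the underprivileged group.
rows = [
    {"sex": 1, "predicted_outcome": 1},
    {"sex": 1, "predicted_outcome": 1},
    {"sex": 1, "predicted_outcome": 0},
    {"sex": 1, "predicted_outcome": 1},
    {"sex": 2, "predicted_outcome": 1},
    {"sex": 2, "predicted_outcome": 0},
    {"sex": 2, "predicted_outcome": 0},
    {"sex": 2, "predicted_outcome": 1},
]

ratio = disparate_impact(rows, "sex", [2], "predicted_outcome", [1])
print(f"disparate impact: {ratio:.2f}")
```

A ratio of 1.0 means both groups receive positive outputs at the same rate; values below 1.0 mean the underprivileged group receives them less often.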

Install Library

eticas-audit is available as a PyPI package. To install it with the pip package manager, run:

    pip install eticas-audit

Getting Started

Define Sensitive Attributes

Use a JSON object to define the sensitive attributes. For each attribute, specify the column that holds it and the values that identify the underprivileged or privileged group. Each group is given as a list, so it can contain more than one value.

Sensitive attributes can be simple, for example, sex or race. They can also be complex, for instance the intersection of sex and race.

    {
        "sensitive_attributes": {
            "sex": {
                "columns": [
                    {
                        "name": "sex",
                        "underprivileged": [2]
                    }
                ],
                "type": "simple"
            },
            "ethnicity": {
                "columns": [
                    {
                        "name": "ethnicity",
                        "privileged": [1]
                    }
                ],
                "type": "simple"
            },
            "age": {
                "columns": [
                    {
                        "name": "age",
                        "privileged": [3, 4]
                    }
                ],
                "type": "simple"
            },
            "sex_ethnicity": {
                "groups": ["sex", "ethnicity"],
                "type": "complex"
            }
        }
    }
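
Before passing a definition to the library, it can help to check it for simple mistakes. The sketch below is a hypothetical helper, `validate_sensitive_attributes`, that is not part of eticas-audit. Its rules are inferred only from the example above: a simple attribute lists columns with a name and a privileged or underprivileged group, and a complex attribute refers to simple attributes by name. The library's real schema may differ.

```python
def validate_sensitive_attributes(attributes):
    """Return a list of problems found in the definition (empty if none).

    Hypothetical helper; the rules are inferred from the example
    definition, not taken from the eticas-audit schema.
    """
    problems = []
    for name, spec in attributes.items():
        kind = spec.get("type")
        if kind == "simple":
            columns = spec.get("columns", [])
            if not columns:
                problems.append(f"{name}: no columns listed")
            for column in columns:
                if "name" not in column:
                    problems.append(f"{name}: column without a name")
                if "privileged" not in column and "underprivileged" not in column:
                    problems.append(f"{name}: no privileged or underprivileged group")
        elif kind == "complex":
            groups = spec.get("groups", [])
            if not groups:
                problems.append(f"{name}: no groups listed")
            for group in groups:
                if attributes.get(group, {}).get("type") != "simple":
                    problems.append(f"{name}: {group!r} is not a defined simple attribute")
        else:
            problems.append(f"{name}: unexpected type {kind!r}")
    return problems


# The inner "sensitive_attributes" object from the JSON above, as a Python dict.
sensitive_attributes = {
    "sex": {"columns": [{"name": "sex", "underprivileged": [2]}], "type": "simple"},
    "ethnicity": {"columns": [{"name": "ethnicity", "privileged": [1]}], "type": "simple"},
    "age": {"columns": [{"name": "age", "privileged": [3, 4]}], "type": "simple"},
    "sex_ethnicity": {"groups": ["sex", "ethnicity"], "type": "complex"},
}

problems = validate_sensitive_attributes(sensitive_attributes)
print(problems or "definition looks consistent")
```

Returning a list of messages rather than raising on the first problem lets you see every issue in a definition at once.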

Create Model

Initialize the model object that will be the focus of the audit. The sensitive attributes are a required input, and you also specify which input features to analyze. You do not need to include every feature, only the most important or relevant ones.

    import logging

    logging.basicConfig(
        level=logging.INFO,
        format='[%(levelname)s] %(name)s - %(message)s'
    )

    from eticas.model.ml_model import MLModel

    # `sensitive_attributes` is assumed to hold the definition from the
    # previous step, loaded into a Python variable.
    model = MLModel(
        model_name="ML Testing Regression",
        description="A logistic regression model to illustrate audits",
        country="USA",
        state="CA",
        sensitive_attributes=sensitive_attributes,
        features=["feature_0", "feature_1", "feature_2"]
    )

Audit Data

This is how to define the audit for a labeled dataset, which in general is the dataset used to train the model. The required inputs are:

- dataset_path: path to the data.

- label_column: the column that holds the label.

- output_column: the column that holds the model’s output.

- positive_output: a list of outputs considered positive.

You can also upload a label or output column with scoring, ranking, or recommendation values (continuous values). If the regression ordering is ascending, the positive output is interpreted as 1; if it is descending, it is interpreted as 0.

    # Labeled audit: needs both the label column and the model output.
    model.run_labeled_audit(
        dataset_path='files/example_training_binary_2.csv',
        label_column='outcome',
        output_column='predicted_outcome',
        positive_output=[1]
    )

    # Production audit: no label column is passed, only the model output.
    model.run_production_audit(
        dataset_path='files/example_operational_binary_2.csv',
        output_column='predicted_outcome',
        positive_output=[1]
    )

    # Impacted audit: uses the recorded outcome as the output column.
    model.run_impacted_audit(
        dataset_path='files/example_impact_binary_2.csv',
        output_column='recorded_outcome',
        positive_output=[1]
    )

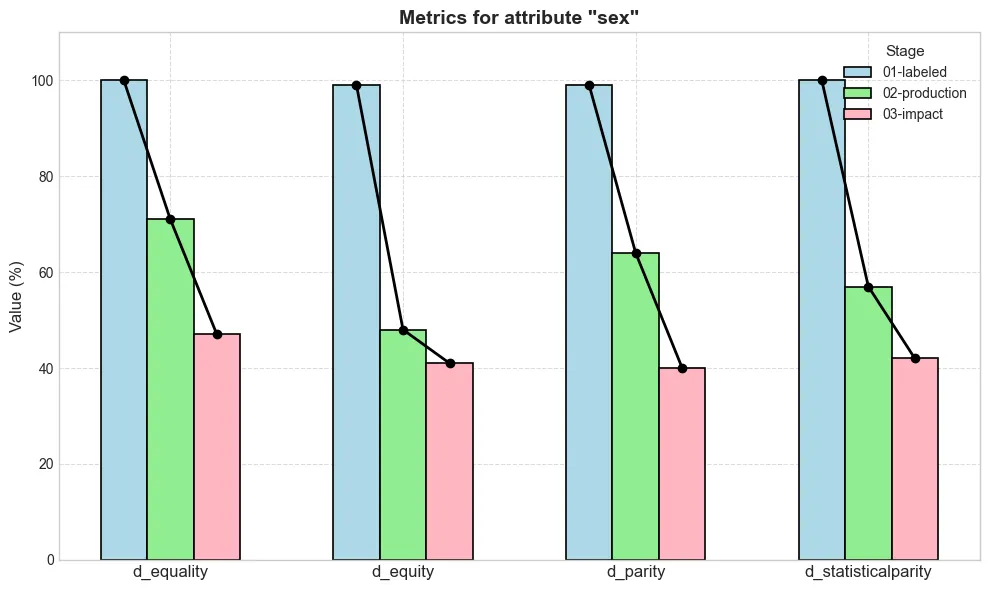

Explore Results

The results can be exported in JSON or DataFrame format. Both options allow you to extract the information with or without normalization. Normalized values range from 0 to 100, where 0 represents a poor result and 100 represents a perfect value.
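
As an illustration of what a 0 to 100 scale can look like, the sketch below maps a ratio metric whose ideal value is 1.0 onto that range. This is not the formula eticas-audit uses, which is not documented here; the function `normalize_ratio` and its symmetric treatment of ratios above and below 1.0 are assumptions chosen only to show the idea.

```python
def normalize_ratio(ratio):
    """Map a ratio metric (ideal value 1.0) to a score between 0 and 100.

    Hypothetical formula: a ratio and its reciprocal get the same score,
    so 0.5 and 2.0 are treated as equally far from parity.
    """
    if ratio < 0:
        raise ValueError("ratio must be non-negative")
    if ratio == 0:
        return 0.0
    return 100.0 * min(ratio, 1.0 / ratio)


for value in (1.0, 0.5, 2.0, 0.0):
    print(value, normalize_ratio(value))
```

With this mapping, a score of 100 means the two groups are at parity, and the score falls toward 0 as the ratio moves away from 1.0 in either direction.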

Conclusion

At eticas-audit, we make building fair AI simple. Our open-source tool compares different groups to spot bias. And with ITACA, our easy-to-use monitoring platform, you can keep your models in check using our API. Check it out and see how straightforward ethical AI can be.