Automated Auditing, Tracking & Certification

Reduce time and cost while controlling your AI Models.

Be aware of Risks

Detect and control the risks of your generative and predictive AI systems.

Stay compliant

Stay up to date with the latest regulations and standards (ISO, EU AI Act, NIST).

Save Time

Reduce the cost of evaluation during development and of monitoring in production.

ITACA: Assess. Observe. Audit.

Evaluate a wide range of AI systems for bias and fairness, data provenance, misinformation, hallucination, environmental impact and organizational readiness.

Observability Platform

Continuous measurement of performance indicators

Document Compliance

Get an audit report to mitigate and manage the risks.

AI Safety Assessment

Evaluate the unique risks of your use case within your specific industry.

Interactive Demo

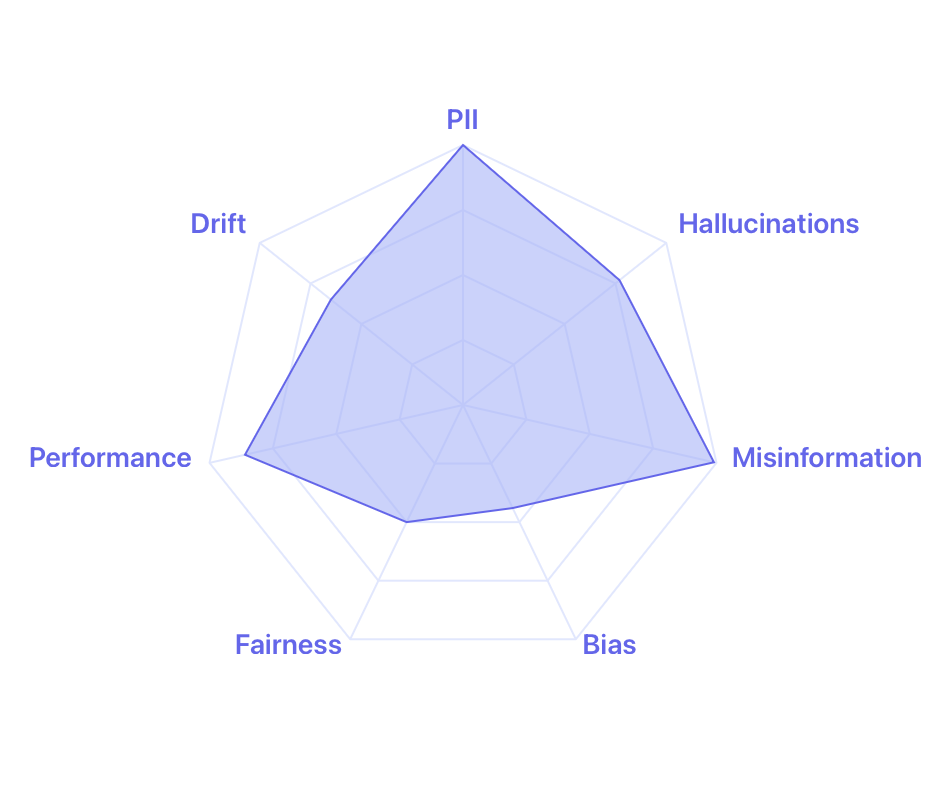

Generative AI

ITACA evaluates Generative AI systems by analyzing the following indicators:

- Ethics & Safety: Diversity, Non-Discrimination, Harmful Content

- Red-Teaming: Security, Privacy and Technical Vulnerabilities

- Misuse and Technical Robustness: Misinformation and Hallucinations

- Performance, Fairness & Bias: ensure consistent delivery of high-quality, reliable outputs under diverse conditions and fairness across demographic groups

Predictive AI

ITACA evaluates Predictive AI systems across all lifecycle stages, comparing privileged and underprivileged groups to ensure an unbiased assessment of model behavior:

- Demographic Benchmarking: Checks that the data reflects the population distribution

- Fairness: Equality, Equity, Disparate Impact, Calibration

- Performance: Accuracy, Precision, Recall, F1

- Features: Correlation, Causality, Explainability
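
To make the group comparison for predictive systems more concrete, here is a minimal Python sketch of how disparate impact and per-group accuracy, precision, recall and F1 could be computed for a binary classifier. It is an illustration only and not ITACA's implementation: the function names (`group_metrics`, `disparate_impact`), the toy data and the group labels are all assumptions made for this example.

```python
from collections import Counter


def group_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for one group (binary labels 0/1)."""
    counts = Counter(zip(y_true, y_pred))
    tp, fp = counts[(1, 1)], counts[(0, 1)]
    fn, tn = counts[(1, 0)], counts[(0, 0)]
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def disparate_impact(y_pred, groups, privileged):
    """Ratio of positive-prediction rates: underprivileged / privileged."""
    priv = [p for p, g in zip(y_pred, groups) if g == privileged]
    unpriv = [p for p, g in zip(y_pred, groups) if g != privileged]
    if not priv or not unpriv or sum(priv) == 0:
        raise ValueError("need both groups and a non-zero privileged rate")
    return (sum(unpriv) / len(unpriv)) / (sum(priv) / len(priv))


# Hypothetical toy data: group "a" is treated as privileged.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

di = disparate_impact(y_pred, groups, privileged="a")
per_group = {
    g: group_metrics(
        [t for t, gg in zip(y_true, groups) if gg == g],
        [p for p, gg in zip(y_pred, groups) if gg == g],
    )
    for g in sorted(set(groups))
}
print(f"disparate impact: {di:.2f}")
for g, m in per_group.items():
    print(g, m)
```

A ratio well below 1 means the underprivileged group receives positive predictions less often than the privileged group. A cut-off of 0.8 (the "four-fifths" rule of thumb) is commonly cited, but this page does not state which threshold ITACA applies.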

Compliance

Our tailored and automated solutions help organizations ensure compliance and mitigate risks across key AI and data governance regulations. We support alignment with major legal and ethical frameworks, including:

- NIST: AI Risk Management Framework (RMF)

- EU AI Act

- ISO/IEC 42001, ISO/IEC TR 24368, ISO/IEC TR 24027

- DSA

- GDPR

- AI Liability Directive

- US Laws: Colorado SB21-169, NYC Local Law 144, OMB M-24-18, OMB M-24-10

- IEEE

- UNESCO AI Principles

Consulting

At Eticas, we offer expert consulting services that integrate ethics, compliance, and accountability into the full lifecycle of AI systems.

With Eticas as your ethics partner, you gain long-term support to navigate complex AI challenges, demonstrate compliance, and lead responsibly in an evolving regulatory landscape.

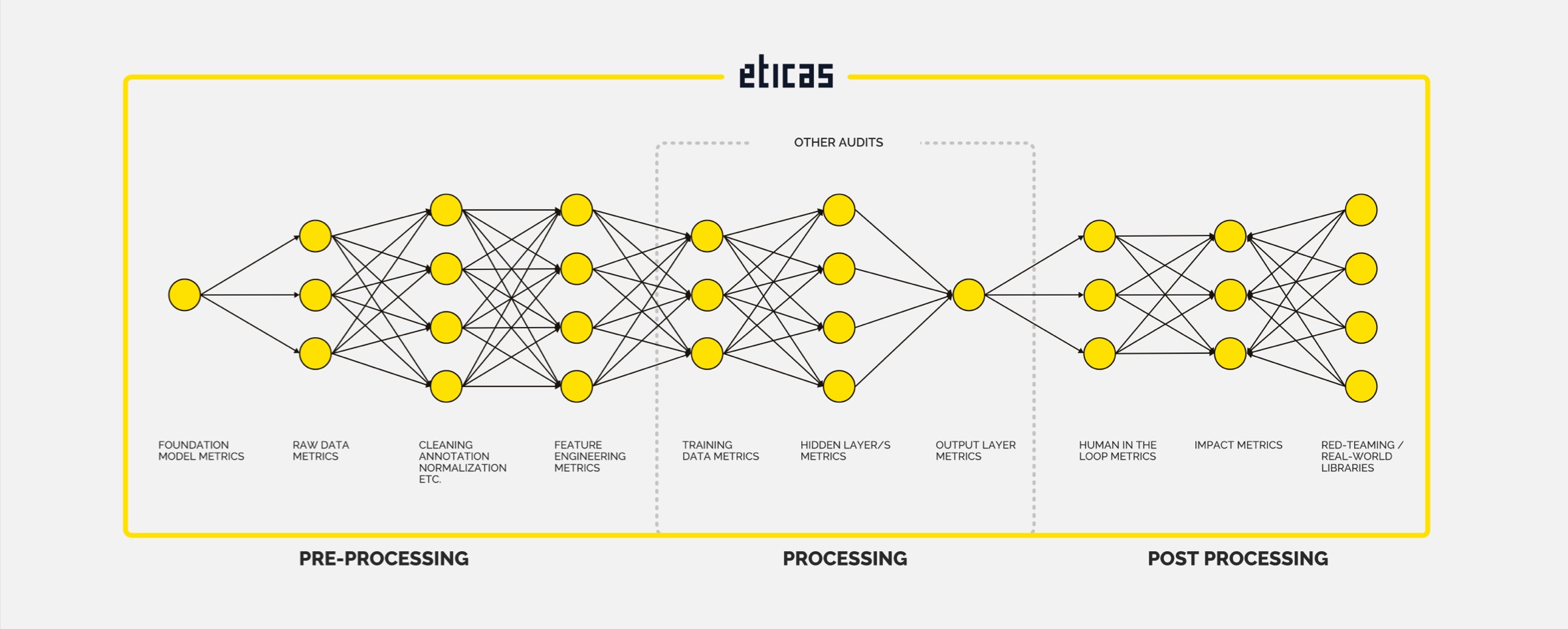

Full Lifecycle AI Audit

Build trust in your models in every phase of the lifecycle with pre-processing, production, and post-processing monitoring.

What our clients are saying

David Whewell

Chief Technology Officer & Head of Ethics, Koa Health

"Eticas has been an invaluable partner for us on our ethics journey, with multiple audits under our belt. I am delighted to note that we continue to make great strides as an ethical organization, and this demonstrates we take Eticas’ recommendations seriously, and see the value in championing the ethical approach to digital solutions in mental healthcare. We constantly hear from our partners, our users and our investors that providing these solutions ethically is paramount."

Nadine Dammaschk

GIZ, FAIR Forward – Artificial Intelligence for All

“Eticas has been supporting us in setting up a structured approach for assessing human rights risks in our AI-related activities in sectors as diverse as agriculture, climate change adaptation and chatbot usage. During this journey, Eticas has shown great dedication in tailoring this methodology with us to the international cooperation context, based on their AI Auditing expertise. Partners and colleagues confirmed to us repeatedly the value of the structured risk assessment. We are grateful for the collaboration with Eticas and the significant impact it has had on how we operationalize AI Ethics in our project.”

Jessica Bither

Senior Expert for Migration at Robert Bosch Stiftung GmbH

“In our work with Eticas, we have greatly valued the in-depth subject expertise of the team and the high-quality research they are able to provide on complex socio-technological questions and issue areas.”